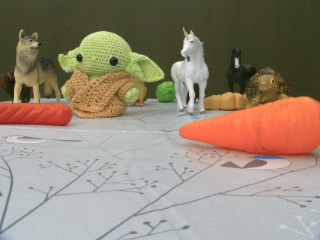

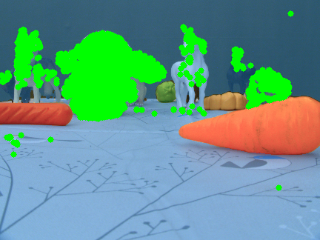

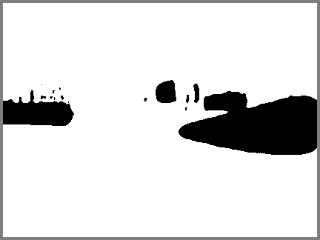

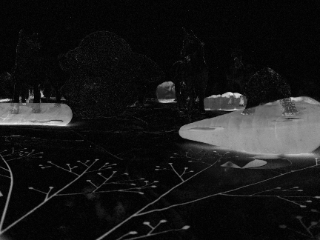

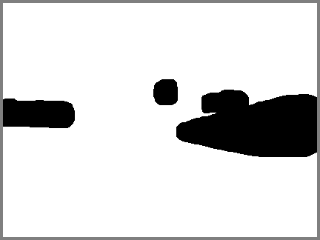

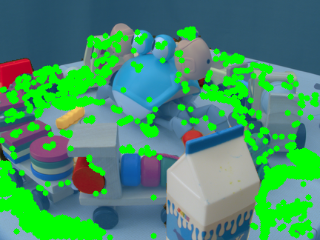

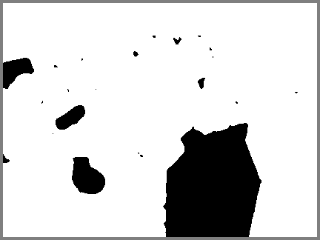

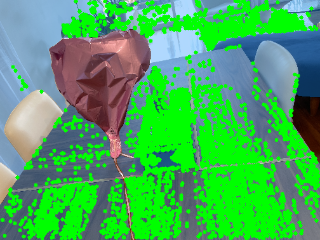

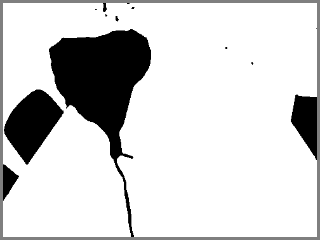

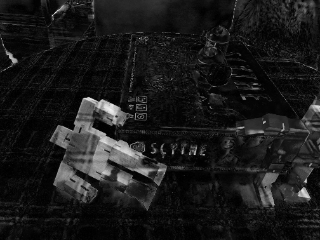

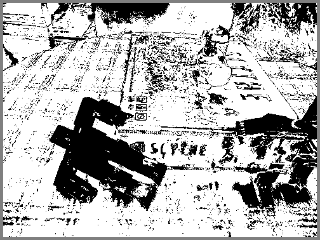

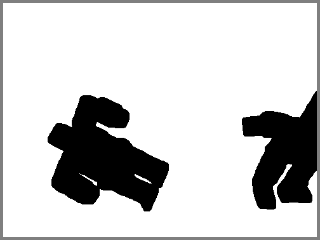

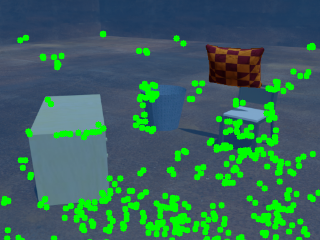

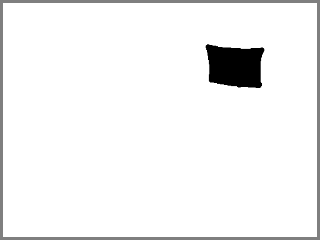

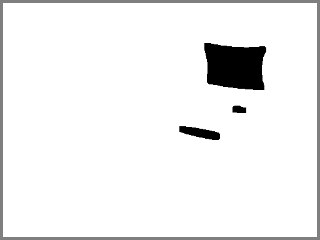

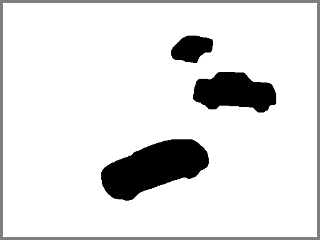

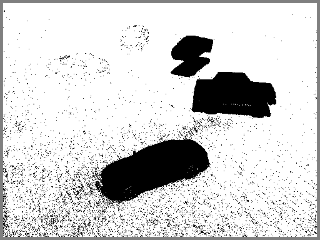

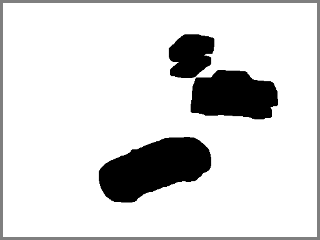

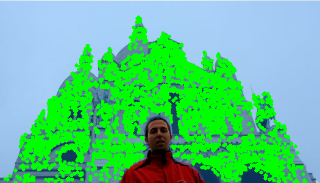

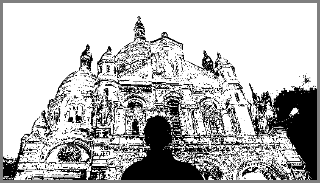

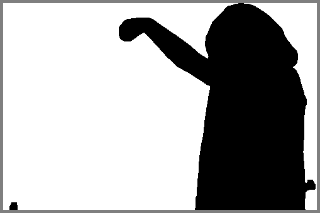

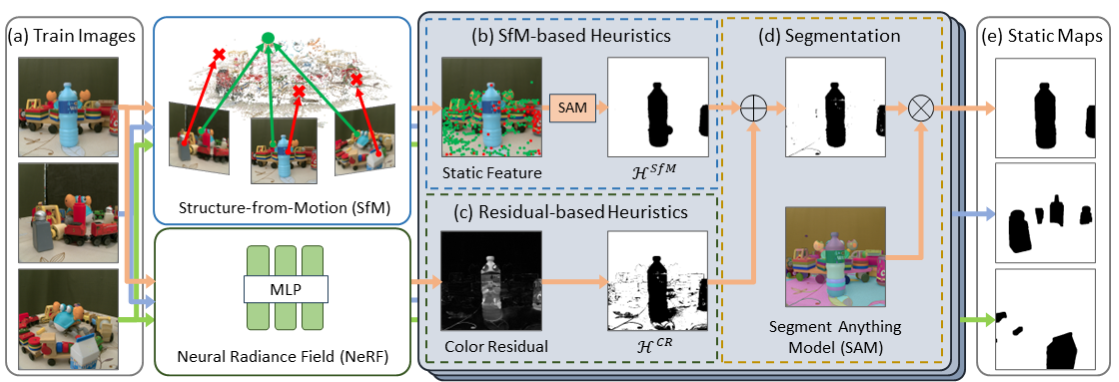

Pipeline of HuGS: (a) Given unordered images of a static scene disturbed by transient distractors as input, we first obtain two types of heuristics. (b) SfM-based heuristics use SfM to distinguish between static features (green) and transient features (red). The static features are then used as point prompts to generate dense masks with SAM. (c) Residual-based heuristics come from a partially trained NeRF (i.e., one trained for several thousand iterations) that can already provide reasonable color residuals. (d) The combination of the two heuristics then guides SAM again to generate (e) the static map for each input image.
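Steps (c) and (d) can be illustrated with a minimal sketch. It is not the HuGS implementation: the function names `residual_static_mask` and `combine_heuristics`, the per-image quantile threshold, and the use of a simple union to merge the two heuristics are all assumptions made for illustration. The SAM prompting step is omitted.

```python
import numpy as np


def residual_static_mask(rendered, target, quantile=0.5):
    """Hypothetical residual-based heuristic.

    Marks a pixel as static when its mean absolute color residual between
    the partially trained NeRF render and the input image is at or below
    a per-image quantile (the quantile choice is an assumption).
    """
    diff = np.abs(rendered.astype(np.float32) - target.astype(np.float32))
    residual = diff.mean(axis=-1)            # (H, W) per-pixel residual
    threshold = np.quantile(residual, quantile)
    return residual <= threshold             # boolean (H, W) mask


def combine_heuristics(sfm_mask, residual_mask):
    """Hypothetical combination step: union of the two static masks."""
    return np.logical_or(sfm_mask, residual_mask)


# Toy example: a 4x4 image where the bottom-right 2x2 block is poorly
# reconstructed (large residual), standing in for a transient distractor.
target = np.zeros((4, 4, 3), dtype=np.float32)
rendered = np.zeros((4, 4, 3), dtype=np.float32)
rendered[2:, 2:] = 1.0

sfm_mask = np.zeros((4, 4), dtype=bool)
sfm_mask[0, 0] = True                        # one static SfM-derived region

residual_mask = residual_static_mask(rendered, target)
combined = combine_heuristics(sfm_mask, residual_mask)
```

In the pipeline described above, a combined mask of this kind would then guide SAM to produce the final static map. The sketch stops before that step.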

Visualization: Here are examples of HuGS results on scenes from different datasets. More results can be found in the paper and the data.